ChatGPT Voice

OpenAI

Interface design

2024

Parsing the interactions

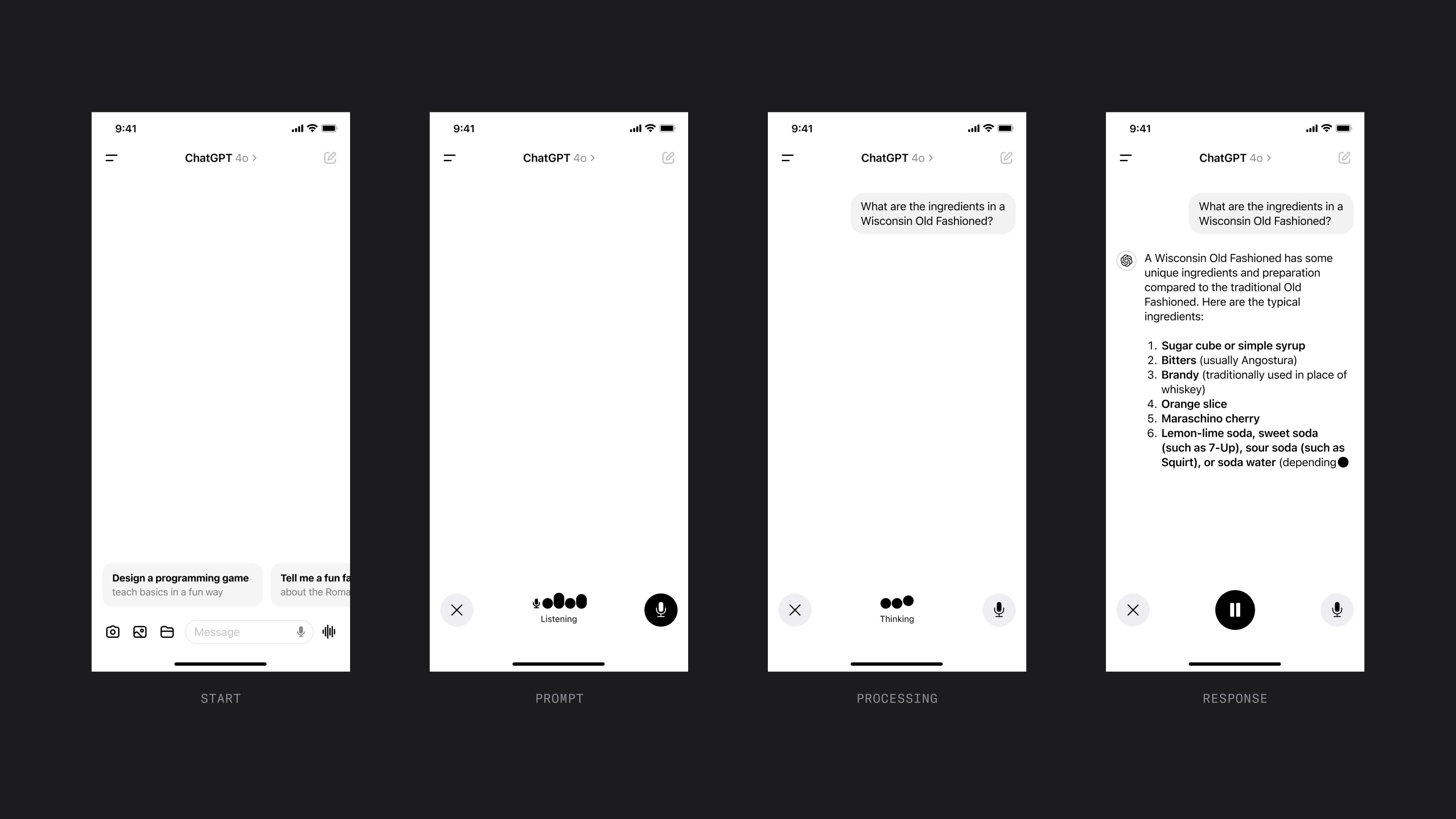

While I had the general idea of how a minimized voice mode UI could give room for the response text to be displayed concurrently, I needed to think through and account for all the voice mode states to see if this idea really had legs. I listed out all the states I could find from using ChatGPT and came up with the following:

Connecting: reaching ChatGPT servers to prep voice interactions

Connection failure

Listening: phone mic turns on and ChatGPT listens for voice prompt

Failure to hear or interpret prompt/no prompt

Toggle mic: states for manually turning the mic on and off

Hold mic: press and hold mic to prevent prompt interruption

Prompt received: user feedback that a prompt has been received

Thinking: user feedback that the prompt is being interpreted

Response: voice response and text display

Pause response: an option for the user to pause voice response
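
One way to check that a list like this covers every path is to write it down as a small state machine. The TypeScript below is only an illustrative sketch: the state names mirror the list above, but the event names and the specific transitions (for example, that releasing a held mic submits the prompt) are assumptions made for the example, not a description of how ChatGPT is actually implemented.

```typescript
// States taken from the list above; names are hypothetical identifiers.
type VoiceState =
  | "connecting"
  | "connectionFailure"
  | "listening"
  | "noPrompt"
  | "micOff"
  | "holdingMic"
  | "promptReceived"
  | "thinking"
  | "responding"
  | "responsePaused";

// Event names are assumptions made for this sketch.
type VoiceEvent =
  | "connected"
  | "connectFailed"
  | "retry"
  | "promptHeard"
  | "promptNotHeard"
  | "toggleMic"
  | "holdStart"
  | "holdEnd"
  | "processingStarted"
  | "responseStarted"
  | "pause"
  | "resume"
  | "responseEnded";

// Allowed transitions per state. Anything not listed is ignored.
const transitions: Record<VoiceState, Partial<Record<VoiceEvent, VoiceState>>> = {
  connecting: { connected: "listening", connectFailed: "connectionFailure" },
  connectionFailure: { retry: "connecting" },
  listening: {
    promptHeard: "promptReceived",
    promptNotHeard: "noPrompt",
    toggleMic: "micOff",
    holdStart: "holdingMic",
  },
  noPrompt: { retry: "listening" },
  micOff: { toggleMic: "listening" },
  // Assumption: releasing the held mic submits the prompt.
  holdingMic: { holdEnd: "promptReceived" },
  promptReceived: { processingStarted: "thinking" },
  thinking: { responseStarted: "responding" },
  responding: { pause: "responsePaused", responseEnded: "listening" },
  responsePaused: { resume: "responding" },
};

// Returns the next state, or the current state if the event does not apply.
function next(state: VoiceState, event: VoiceEvent): VoiceState {
  return transitions[state][event] ?? state;
}
```

Writing the transitions as a table makes gaps easy to spot: any state with no outgoing event, or any event that no state handles, stands out right away.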

Minifying the UI

These states currently show various animations to provide user feedback, and each would need to be reimagined for a smaller space. I started with the idea of adding a mic button for voice input and keeping the existing mic status animation (the four moving dots), and then worked out how to transition from the prompt state to the response state. I accomplished this by morphing the mic status indicator into the “prompt received” and “thinking” feedback animations.

The next step was to figure out what the response state should be. A challenge with this was to include 1) the additional action of pausing the response and 2) some sort of animation that represents the AI talking (currently a pulsing chat bubble shape). It seemed sensible to merge these two things so that the pause button itself pulses with the voice response. I thought this was a solid solution, as it visually ties the pausing action to the voice animation.

One final thing to account for was the action of pressing and holding to stop interruptions of your prompt. This could be done simply by pressing and holding the mic button; however, some visual and potentially haptic indication should let the user know the mic button is being pressed. The solution I chose was for the button to enlarge, indicating it’s being held.
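
The decisions in this section can be summarized as a mapping from voice state to what the single compact control shows. The sketch below is a hypothetical illustration, not production code: the type names, the `controlFor` function, the `play` icon for the paused state, and the `1.25` scale factor are all assumptions chosen for the example.

```typescript
// Only the states that the minimized control has to represent.
type CompactState =
  | "listening"
  | "holdingMic"
  | "promptReceived"
  | "thinking"
  | "responding"
  | "responsePaused";

interface CompactControl {
  icon: "mic" | "pause" | "play" | "none";
  animation: "dots" | "received" | "thinking" | "pulse" | "none";
  scale: number; // 1 = normal size
}

// Maps each state to the appearance of the one compact button.
function controlFor(state: CompactState): CompactControl {
  switch (state) {
    case "listening":
      // Mic button with the existing four-moving-dots status animation.
      return { icon: "mic", animation: "dots", scale: 1 };
    case "holdingMic":
      // The button enlarges while held; 1.25 is an arbitrary example value.
      return { icon: "mic", animation: "dots", scale: 1.25 };
    case "promptReceived":
      // The mic indicator morphs into the "prompt received" feedback.
      return { icon: "none", animation: "received", scale: 1 };
    case "thinking":
      return { icon: "none", animation: "thinking", scale: 1 };
    case "responding":
      // The pause button itself pulses with the voice response.
      return { icon: "pause", animation: "pulse", scale: 1 };
    case "responsePaused":
      // Assumption: a paused response shows a play icon and no animation.
      return { icon: "play", animation: "none", scale: 1 };
  }
}
```

Keeping the mapping in one place shows that a single button can carry every state, which is the core premise of the minimized UI.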

Final thoughts

I like the result, and I think it works, but the design may have veered a bit from my original vision once I worked through the solutions. The first image I had in my head regarding this idea was actually more along the lines of the “lyrics” mode in Spotify, where text lights up as the lyrics are sung. I wrestled with whether the text should appear in sync with the voice, but one of the main things this redesign is trying to solve is allowing the user to skip ahead in the text. You could display the text word for word with the voice response and have a “skip” button that stops the voice and shows the rest of the text, but that really wouldn’t be too different from the current UI. I opted instead to show all of the text right away and let the user choose to keep playing or pause the voice. Still, should there be some other way of highlighting the words as they’re being spoken?

One other thing I reflected on was the use, or nonuse, of color. I definitely wanted to maintain ChatGPT’s minimalism, and I debated whether color is necessary for status indications like mic on/off and pause (currently red). Ultimately, I opted to leave color out, but I think this is certainly arguable.

Hope you enjoyed this concept! Would love to know what you think of it. Send me a tweet with any thoughts or feedback.